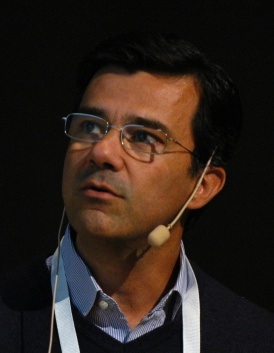

Marcello Pelillo is Professor of Computer Science at Ca' Foscari University in Venice, Italy, where he directs the European Centre for Living Technology (ECLT) and the Computer Vision and Pattern Recognition group. He has held visiting research positions at Yale University, McGill University, the University of Vienna, York University (UK), University College London, and National ICT Australia (NICTA), and is an Affiliated Faculty Member of the Department of Computer Science at Drexel University. He has published more than 200 technical papers in refereed journals, handbooks, and conference proceedings in the areas of pattern recognition, computer vision, and machine learning. He is General Chair for ICCV 2017 and Track Chair for ICPR 2018, and has served as Program Chair for several conferences and workshops, many of which he initiated (e.g., EMMCVPR, SIMBAD, IWCV). He serves or has served on the editorial boards of IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI), Pattern Recognition, IET Computer Vision, Frontiers in Computer Image Analysis, and Brain Informatics, and serves on the Advisory Board of the International Journal of Machine Learning and Cybernetics. Prof. Pelillo has been elected a Fellow of the IEEE and a Fellow of the IAPR, and has recently been appointed an IEEE SMC Distinguished Lecturer. His Erdős number is 2.

In this talk I’ll describe a framework for pattern recognition problems that is grounded in the primacy of relational and contextual information at both the object and the category levels. I’ll deal with semantic categorization scenarios involving a number of interrelated classes, the common intuition being to view classification problems as non-cooperative games, in which the competition between class-membership hypotheses is driven by contextual and similarity information encoded in payoff functions. Unlike standard classification algorithms, which assign similar objects to the same class labels and thereby neglect category-level similarities, our model will conform to the more general “Hume’s similarity principle”, which prescribes that similar objects should be assigned to similar categories. From this perspective, the focus shifts from optimality to Nash equilibrium conditions. Particular emphasis will be given to evolutionary game-theoretic models, which offer a fresh dynamical-systems perspective on learning and classification problems. I will also present example applications that show the effectiveness of the approach.
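To make the game-theoretic view concrete, here is a minimal sketch, assuming a graph-transduction-style formulation that is not necessarily the model presented in the talk: each object is a player whose pure strategies are the class labels, labelled objects play fixed pure strategies, and the payoff between players i and j is the object similarity `W[i][j]` times a class-similarity matrix `Z`. With `Z` equal to the identity, only identical labels are rewarded ("similar objects, same class"); off-diagonal entries let similar categories reward each other, which is one way to encode Hume's principle. Unlabelled players update their mixed strategies with discrete-time replicator dynamics, and every Nash equilibrium of this game is a rest point of those dynamics. The function `transduction_game` and the toy data are hypothetical.

```python
def transduction_game(W, Z, labels, n_classes, iters=200):
    """Label propagation as a multi-player game solved with replicator dynamics.

    W: n x n nonnegative object-similarity matrix (list of lists).
    Z: n_classes x n_classes nonnegative class-similarity matrix; the identity
       rewards only identical labels, off-diagonal entries reward similar ones.
    labels: dict {object index: class index}; these players keep pure strategies.
    Returns one mixed strategy (probability vector over classes) per object.
    """
    n = len(W)
    X = [[1.0 if h == labels[i] else 0.0 for h in range(n_classes)] if i in labels
         else [1.0 / n_classes] * n_classes
         for i in range(n)]
    for _ in range(iters):
        new_X = []
        for i in range(n):
            if i in labels:  # labelled players never change strategy
                new_X.append(X[i])
                continue
            # Payoff of pure strategy h: sum_j w_ij * sum_k Z[h][k] * x_j[k]
            payoff = [sum(W[i][j] * sum(Z[h][k] * X[j][k] for k in range(n_classes))
                          for j in range(n) if j != i)
                      for h in range(n_classes)]
            avg = sum(x * p for x, p in zip(X[i], payoff))  # expected payoff
            if avg > 0:
                # Replicator update: better-than-average labels gain probability
                new_X.append([x * p / avg for x, p in zip(X[i], payoff)])
            else:
                new_X.append(X[i])
        X = new_X
    return X

# Two tight pairs {0, 1} and {2, 3} with weak links between them;
# object 0 is labelled class 0, object 2 is labelled class 1.
W = [[0.0, 1.0, 0.01, 0.01],
     [1.0, 0.0, 0.01, 0.01],
     [0.01, 0.01, 0.0, 1.0],
     [0.01, 0.01, 1.0, 0.0]]
Z = [[1.0, 0.0],
     [0.0, 1.0]]
X = transduction_game(W, Z, labels={0: 0, 2: 1}, n_classes=2)
print([max(range(2), key=lambda h: x[h]) for x in X])  # [0, 0, 1, 1]
```

Replacing `Z` with, for example, `[[1.0, 0.5], [0.5, 1.0]]` lets a neighbour labelled with a related class still lend partial support, so the equilibrium reflects category-level as well as object-level similarity. The payoffs must stay nonnegative for the replicator update to remain a valid probability vector.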

Ivan Laptev is a senior researcher at INRIA Paris, France. He received a PhD degree in Computer Science from the Royal Institute of Technology in 2004 and a Habilitation degree from École Normale Supérieure in 2013. Ivan's main research interests include visual recognition of human actions, objects, and interactions. He has published over 60 papers at international conferences and in journals of computer vision and machine learning. He serves as an associate editor of the IJCV and TPAMI journals and will serve as a program chair for CVPR’18. He was an area chair for CVPR’10, ’13, ’15 and ’16; ICCV’11; ECCV’12 and ’14; and ACCV’14 and ’16. He has co-organized several tutorials, workshops, and challenges at major computer vision conferences, as well as a series of INRIA summer schools on computer vision and machine learning (2010–2013). He received an ERC Starting Grant in 2012.

Recent progress in visual recognition goes hand in hand with supervised learning and large-scale annotated training data. While the amount of existing images and videos is huge, their detailed annotation is expensive and often ambiguous. To address these issues, this talk will focus on weakly-supervised learning that uses incomplete and noisy supervision for training. In the first part I will discuss recognition from still images and describe our work on weakly-supervised CNNs for localizing objects, human actions, and object relations. The second part of the talk will focus on learning from videos. I will show examples of learning human actions from movie scripts and from narrations in YouTube instructional videos. I will also present our new synthetic dataset, which enables person segmentation and depth estimation in real videos. Finally, I will describe our video tagging system, the winner of the YouTube-8M Video Understanding Challenge.
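One common way to train a localizer from image-level labels alone (assumed here for illustration; the architecture in the talk may differ) is to have a fully convolutional network output a spatial score map per class and aggregate it with global max pooling. The loss then only asks "does the class occur anywhere in the image?", while the location of the maximum serves as a localization estimate. The sketch below shows just that aggregation and loss on a hand-written score map; the CNN itself is omitted, and `global_max_pool` and `weak_logistic_loss` are hypothetical names.

```python
import math

def global_max_pool(score_map):
    """Return the maximum score in a 2-D map (list of rows) and its (row, col)."""
    best, best_pos = -math.inf, None
    for r, row in enumerate(score_map):
        for c, s in enumerate(row):
            if s > best:
                best, best_pos = s, (r, c)
    return best, best_pos

def weak_logistic_loss(score_map, present):
    """Logistic loss on the pooled score, using only an image-level label.

    present: True if the class occurs anywhere in the image, else False.
    """
    s, _ = global_max_pool(score_map)
    z = -s if present else s  # z = -y * s with y in {+1, -1}
    # Numerically stable log(1 + exp(z))
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))

# Hypothetical 3x3 score map for one class, as a fully convolutional net might produce
scores = [[-1.2, 0.3, -0.8],
          [-0.5, 2.1, 0.4],
          [-2.0, -0.1, -0.9]]
pooled, location = global_max_pool(scores)
print(pooled, location)  # 2.1 (1, 1)
print(weak_logistic_loss(scores, present=True))
```

Because the derivative of a max is nonzero only at the arg-max, backpropagation through this loss updates the network only at the highest-scoring location, which is why that location tends to settle on the object during training.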

Gavin Brown is Reader in Machine Learning at the University of Manchester, and Director of Research for the School of Computer Science. His work, and that of his team, has been recognized twice (2004, 2013) with awards from the British Computer Society for outstanding UK PhD of the year. The team is currently conducting research on machine learning methods for clinical drug trials, methods for predicting domestic violence, and efficient modular deep neural networks, sponsored by the UK and the European Union. Gavin is a keen public communicator, engaging in several public events per year on issues around artificial intelligence and machine learning, including several appearances on the BBC children's channel explaining robots and AI.

We provide a unifying perspective on two decades of work on cost-sensitive Boosting algorithms. Analyzing the literature from 1997 to 2016, we find 15 distinct cost-sensitive variants of the original algorithm. Each of these has its own motivation and claims to superiority, so who should we believe? In this work we critique the Boosting literature using four theoretical frameworks: Bayesian decision theory, the functional gradient descent view, margin theory, and probabilistic modelling. We find, surprisingly, that almost all of the proposed variants are inconsistent with at least one of these theories. After an extensive empirical study, our final recommendation, based on simplicity, flexibility, and performance, is to use the original AdaBoost algorithm with a shifted decision threshold and calibrated probability estimates.
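A minimal sketch of that recommendation follows, under several assumptions not taken from the work itself: 1-D inputs, decision stumps as weak learners, Platt scaling (a logistic fit on the boosting score) as the calibration method, and a threshold from Bayesian decision theory. If a false positive costs `c_fp` and a false negative costs `c_fn`, predicting +1 has expected cost (1 − p)·`c_fp` and predicting −1 has expected cost p·`c_fn`, so the cheaper choice is +1 exactly when p > `c_fp` / (`c_fp` + `c_fn`). For brevity the calibration is fitted on the training scores, which are overconfident; in practice a held-out split would be used. All function names and data are hypothetical.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def stump_predict(x, t, pol):
    """Decision stump on a 1-D input: pol if x >= t, else -pol."""
    return pol if x >= t else -pol

def fit_stump(xs, ys, w):
    """Return (weighted_error, threshold, polarity) of the best stump."""
    best = None
    for t in sorted(set(xs)):
        for pol in (1, -1):
            err = sum(wi for xi, yi, wi in zip(xs, ys, w)
                      if stump_predict(xi, t, pol) != yi)
            if best is None or err < best[0]:
                best = (err, t, pol)
    return best

def adaboost(xs, ys, rounds=10):
    """Plain (cost-insensitive) AdaBoost with stumps; ys must be +1/-1."""
    n = len(xs)
    w = [1.0 / n] * n
    model = []
    for _ in range(rounds):
        err, t, pol = fit_stump(xs, ys, w)
        err = min(max(err, 1e-10), 1 - 1e-10)  # keep log() finite
        alpha = 0.5 * math.log((1 - err) / err)
        model.append((alpha, t, pol))
        # Misclassified points gain weight, correctly classified points lose it
        w = [wi * math.exp(-alpha * yi * stump_predict(xi, t, pol))
             for xi, yi, wi in zip(xs, ys, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return model

def boost_score(model, x):
    """Real-valued ensemble output F(x) = sum_m alpha_m * h_m(x)."""
    return sum(alpha * stump_predict(x, t, pol) for alpha, t, pol in model)

def platt_fit(scores, ys, lr=0.1, iters=2000):
    """Fit p(y=+1 | F) = sigmoid(a*F + b) by gradient descent on the log-loss."""
    a, b = 1.0, 0.0
    n = len(scores)
    for _ in range(iters):
        ga = gb = 0.0
        for s, y in zip(scores, ys):
            diff = sigmoid(a * s + b) - (1.0 if y == 1 else 0.0)
            ga += diff * s
            gb += diff
        a -= lr * ga / n
        b -= lr * gb / n
    return a, b

def predict_with_costs(model, a, b, x, c_fp, c_fn):
    """Bayes decision rule: predict +1 iff p(y=+1|x) > c_fp / (c_fp + c_fn)."""
    p = sigmoid(a * boost_score(model, x) + b)
    return 1 if p > c_fp / (c_fp + c_fn) else -1

# Hypothetical toy data: 1-D inputs with one out-of-order pair (x=4, x=5)
xs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [-1, -1, -1, -1, 1, -1, 1, 1, 1, 1]
model = adaboost(xs, ys, rounds=10)
a, b = platt_fit([boost_score(model, x) for x in xs], ys)
for c_fn in (1.0, 10.0):  # false negatives 1x or 10x as costly as false positives
    print(c_fn, [predict_with_costs(model, a, b, x, 1.0, c_fn) for x in xs])
```

The booster itself is never modified: changing the costs only moves the threshold on the calibrated probability, so raising `c_fn` can only turn borderline predictions from −1 into +1, never the reverse.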